Bad blogger me, late as usual.

On Thursday I got back from a longer trip to CERN, Geneva. The purpose of the trip was to sit shift for the HLT subsystem in ALICE, on behalf of the University of Bergen. While not a prerequisite for completion, it was part of my senior project.

Due to the whole volcano ash thing, we had to take the train from Tønsberg, Norway to Geneva, Switzerland(!!). Actually we didn’t have to, but we wanted to go, and after 4 days of “ash clouds, maybe there will be a flight, maybe there won’t” we decided to just get there on our own.

The trip turned out a bit less smooth because the ordinary night trains from Copenhagen to Basel were full, so we had to wait 8 hours in Copenhagen and switch between several small regional trains in Denmark in the middle of the night. But hey, we got to see Copenhagen also 😉

The mainstream news coverage of the volcano ash business has been all but sickening, but the following little comic made me laugh hard, especially because of the supposed-to-look-Icelandish language used:

‘Put 30 billion euro in the garbage can in front of the Icelandic embassy tonight, and we will turn off the volcano! Don’t call the police!’

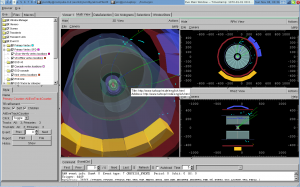

Down at CERN, most of the shifts were pretty uneventful as there were seldom “stable beams”. The beam refers to the particles being accelerated (in the LHC in ALICE’s case), and stable means that collisions are happening in a controlled manner so that you can actually start data-taking.

So, when you’re not taking data, sitting shifts mostly means noting down problems that occur with your subsystem, and possibly notifying the on-call expert if deemed serious enough. Actually, being a shifter on HLT should be like that all the time, as it is supposed to be a completely autonomous system in this aspect.

I got to work a bit on my senior project, a bit on MyPaint, a bit on other things, but didn’t really get to be as productive as I would have liked. Especially on the night-shift, I found it hard to do any serious work. Strange, as I often have my most productive times after midnight when at home.

What I regret the most is packing too many clothes and not bringing a camera. Even if we didn’t really do a lot of very exciting stuff, it still would have been nice to have pictures: capturing the moment, binding the state of mind at that instant to something physical, for easier retrieval at a later time.