At home I have a physical server that runs as a virtualization host (with KVM, LXC, QEMU, libvirt and NFS), while the services I actually use run in virtualized servers, mostly LXC containers.

One of these runs MPD and outputs music to the host’s sound card, in addition to a web stream that I can access from anywhere in the world. Since the host and guest share the same kernel, passing the sound card on to the guest was as simple as bind mounting the host’s /dev/snd into the guest’s root filesystem. I’m not quite sure how ALSA handles concurrent access, but this might also work when several guests (or the host and guests) play to the same device.
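In LXC’s container config the same bind mount can be written as a mount entry plus a device cgroup rule, so it is set up every time the container starts. The fragment below is a sketch assuming LXC 1.0 or later (relative target paths and the `create=dir` option) and the cgroup v1 key names; on cgroup v2 hosts, newer LXC releases use `lxc.cgroup2.devices.allow` instead. ALSA device nodes use character major number 116.

```
# Bind the host's ALSA devices into the guest; the target path is relative
# to the container rootfs, and create=dir makes the mount point if missing
lxc.mount.entry = /dev/snd dev/snd none bind,create=dir 0 0

# Let the guest read, write and mknod ALSA character devices (major 116)
lxc.cgroup.devices.allow = c 116:* rwm
```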

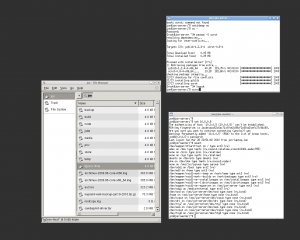

But what is required to have working Xorg inside a cgroup/lxc container? Can we just do something similar? Turns out we can. Basic Xorg with the vesa driver and the old style input (no hal input hotplugging) works if you bind mount in /dev/tty7 (or another tty), /dev/mem, /dev/dri and /dev/input. Here is the obligatory screenshot:

Xorg running on the host graphics card from within a cgroup
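Expressed as LXC configuration, the four device paths listed above might look like the fragment below. It is a sketch in LXC 1.0-style syntax (assumed; adjust for your LXC version), using the standard Linux major numbers: 4 for ttys, 1:1 for /dev/mem, 226 for DRM devices and 13 for input devices. The comments are kept on their own lines rather than after the values.

```
# Bind mount the host's display and input devices into the guest
lxc.mount.entry = /dev/tty7 dev/tty7 none bind,create=file 0 0
lxc.mount.entry = /dev/mem dev/mem none bind,create=file 0 0
lxc.mount.entry = /dev/dri dev/dri none bind,create=dir 0 0
lxc.mount.entry = /dev/input dev/input none bind,create=dir 0 0

# Allow access to the same nodes in the devices cgroup
# /dev/tty7
lxc.cgroup.devices.allow = c 4:7 rwm
# /dev/mem
lxc.cgroup.devices.allow = c 1:1 rwm
# /dev/dri/*
lxc.cgroup.devices.allow = c 226:* rwm
# /dev/input/*
lxc.cgroup.devices.allow = c 13:* rwm
```

Note that the `c 1:1` rule is exactly the /dev/mem exposure whose security implications are discussed below.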

So what’s my motivation for trying this out? Mostly curiosity, but in a multiseat system it could be nice to run each seat as a separate OS in an LXC container. That would allow each seat to run a different distribution (within certain limits), ensure that resources (CPU time, memory) are distributed evenly, and provide isolation for security reasons.

Needing access to /dev/mem is currently a deal breaker as far as security goes: whoever gains root access in one of the guests can modify the physical memory and thereby take control of the host (and thus the other guests). There has been some interest in running Xorg as an unprivileged user, which might address this issue. Locking it down with SELinux or similar might also be possible.

Obviously, for multiseat you will need multiple screens somehow, either by using multiple cards or by sharing a single card (one output per seat). I don’t immediately see any reason why the former should pose problems, and with Dave Airlie’s recent work on multiple X servers on a single card, the latter might also be possible in combination with cgroups/LXC.

I plan to test whether this also works with the Intel driver, and in time perhaps the proprietary Nvidia one too. I’d also like to try getting input with HAL hotplugging working.

Very interesting, I would like to learn more.

I also tried to start Xorg in several LXC containers, but it failed.

Hello Jon. This is interesting and is something that I am also investigating. If LXC can do this then it’s another nail in the coffin for OpenVZ as far as I am concerned.

Now, would it be possible to give one container tty7 and another tty8, and have two completely different distributions each running its own X desktop, switching between them with Ctrl+Alt+F7 and Ctrl+Alt+F8?

Hey John, nice to see some interest. As you probably know, running one Xorg session per tty on a single host is trivial, but I’m not sure how much more complicated it gets when you want each in its own container. I would imagine it requires access to the framebuffer device at least, but that might be simple. I can only suggest trying it out, asking questions (perhaps on the lxc-users mailing list) if you get stuck, and reporting back once you get it working (or know what it would take) 😉

I’d like to research some more and improve the situation for running desktops in LXC containers, but it’s currently far from the top of my TODO list.

Hi, good to see someone else is interested in doing this. If I accept the “risk” of access to /dev/mem (because all the containers are mine and on my own PC) then it would be good to get this to work.

However, I can’t bind mount /dev/mem into the container. I have tried adding this line to the container fstab: `/dev/mem /srv/lxc/arch/dev/mem auto rw,bind 0 0`, but container startup fails because /dev/mem is not a directory.

I tried giving access via cgroups like this: `lxc.cgroup.devices.allow = c 1:1`, but container startup fails.

I tried creating /dev/mem inside the container (as root) with `mknod /dev/mem c 1 1`, but this gives ``mknod: `/dev/mem': Operation not permitted``.

I wondered if you had any luck getting X in an LXC container and could share some guidance…?

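You will have to create the directory /srv/lxc/arch/dev and then possibly a dummy file /srv/lxc/arch/dev/mem before you bind mount like that: a bind mount needs an existing mount point of the same kind, so a device node like /dev/mem must be bound onto a file, not a directory. mknod should not be required.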
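A small shell helper along those lines, as a sketch: the function name `prepare_bind_target` is hypothetical, and it only prepares the mount point (a directory for directories, an empty regular file for device nodes such as /dev/mem); it does not mount anything itself.

```shell
#!/bin/sh
# Hypothetical helper: create an empty mount point inside a container
# rootfs so a host directory or device node can be bind mounted onto it.
# Usage: prepare_bind_target ROOTFS HOST_PATH
prepare_bind_target() {
    rootfs=$1
    host_path=$2
    target="$rootfs$host_path"

    if [ -d "$host_path" ]; then
        # Directories (e.g. /dev/dri, /dev/input) need a directory mount point
        mkdir -p "$target"
    else
        # Files and device nodes (e.g. /dev/mem) need a file mount point;
        # an empty regular file is enough, so no mknod is required
        mkdir -p "$(dirname "$target")"
        [ -e "$target" ] || : > "$target"
    fi
}
```

Run as root on the host before starting the container, e.g. `prepare_bind_target /srv/lxc/arch /dev/mem`; the fstab entry from the question should then have a file to bind onto.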

Also, I realized that access to /dev/mem should only be necessary when using VESA (and possibly some of the proprietary drivers). If you’re using a DRI-based driver, access to /dev/dri should be enough, though I have not found the time to verify this.

Hi, just to let you know…

I have two X desktops running independently on two virtual terminals (currently one on the host and one in a container).

I have ALSA working in both for multiple users, but only from either the host or the container at any one time. I am trying to get past that problem.

I’ve been working with MultiSeat systems since 2004 and a sane way to do this has been sorely lacking. I think virtualization (be it LXC or my preference, OpenVZ) gives us the opportunity to put each “seat” in a nice, neat, secure box of resources for once.

Hi John. What did you use to configure your sound? I have created an environment that lets you open multiple X11 screens over SSH forwarding, but there is no sound yet. I would be very grateful if you could help me out. You can find what I created here: https://github.com/rogaha/docker-desktop

Note: Docker is a tool that allows you to automate and manage LXC containers! You should also check it out: http://www.docker.io

Thanks,

Roberto

Hi Roberto,

I just bind mounted the host’s /dev/snd onto the guest’s /dev/snd, and inside the guest applications used ALSA normally. But John mentions a couple of comments up that this only works from one OS at a time.

Do note that this is a very old post. I think that today a better strategy is probably to use systemd for the “containerization”, instead of using LXC.

For sound, I think a good approach would be a “master” PulseAudio server that handles the multiplexing. The guests could either access it directly or run their own PulseAudio servers that send the sound stream to the master over the network (depending on the required degree of separation).

This is entirely untested by me, but I know the systemd developers want sane multiseat systems, and that they have come a long way with this.
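The “master” PulseAudio approach could look roughly like the sketch below, which is equally untested. It assumes the host is reachable from the guests at 10.0.3.1 on a 10.0.3.0/24 container network (hypothetical addresses) and uses PulseAudio’s native protocol over TCP on its default port 4713, with access restricted by an IP ACL.

```
# Host, in /etc/pulse/default.pa: accept native-protocol clients from the guests
load-module module-native-protocol-tcp auth-ip-acl=127.0.0.1;10.0.3.0/24

# Guest with direct access, in /etc/pulse/client.conf: use the master server
default-server = tcp:10.0.3.1:4713

# Guest with its own PulseAudio server, in its default.pa: forward a sink to the master
load-module module-tunnel-sink server=tcp:10.0.3.1:4713
```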

Jon…

I read the post and the comments. I also cannot get sound to work in an LXC container, but I will admit that I may not be doing things right or may have left something out.

I am using Ubuntu 13.10 and LXC.

On the host I do the following:

`$ sudo mount "-o rw,bind" "/dev/snd" "/var/lib/lxc/CN_name/rootfs/dev/snd"`

I then edited /var/lib/lxc/CN_name/config and added:

`lxc.cgroup.devices.allow = c 116:* rwm`

I then start the container with `$ sudo lxc-start -n CN_name`.

After this, I still cannot get any sound out of the container.

I did a check:

`$ lspci -v | more`

and it reports the capabilities as denied for all PCI devices (including my host’s sound card).